Note: this blog builds on concepts from the OCI 2024 Generative AI Professional certification and course. I added extra steps to resolve issues I encountered while following the guides.

This course is fantastic, and Oracle is offering a free certification promotion through July 31, 2024.

The course describes how to use IDEs to build LLM applications with the examples in its GitHub repository. This blog explains how to configure PyCharm to run those examples, whilst my other blog explains how to set up Jupyter notebooks.

Note on WSL: I had to rework this blog. I originally wanted to use PyCharm with WSL, because I already had Python set up there for web scraping. However, it became apparent that PyCharm only configures a WSL interpreter in PyCharm Professional. This blog therefore targets PyCharm Community Edition.

Note on OCI Regions: According to this page, text generation models are only available in US Midwest (Chicago) at the time of writing. The code examples in this blog will therefore only work in US Midwest (Chicago). My region is Germany Central (Frankfurt), which only supports chat models. However, I have adapted the scripts for chat models (see the Streamlit Chatting section). If you want to read more about this, I recommend this discussion.

This guide is for Windows. You'll need to adapt it for other operating systems.

Set up OCI key

Press Windows Key + R and type %USERPROFILE%

Create an .oci folder

In OCI, click Profile > My Profile

Click API Keys > Add API Key > Download Private Key

Don't touch anything in OCI yet

Copy the file to %USERPROFILE%\.oci

Back in OCI, click Add

Don't touch anything in OCI yet, keep the window open

Copy the configuration file preview from OCI to the clipboard

Save the contents of the clipboard to a new file called %USERPROFILE%\.oci\config

Change the last line to the following (adjust the path as necessary):

key_file=c:\full\path\to\folder\.oci\oci_api_key.pem

My folder looks like this:
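If you want to sanity-check the config file before going further, here's a minimal sketch that uses only the Python standard library. It assumes the usual OCI config layout: a [DEFAULT] profile containing user, fingerprint, tenancy, region and key_file. The check_oci_config helper is hypothetical and not part of the OCI SDK. It only reports missing keys and a key_file path that doesn't exist.

```python
import configparser
import os

# Keys an API-key profile in ~/.oci/config normally contains (assumption).
REQUIRED_KEYS = ("user", "fingerprint", "tenancy", "region", "key_file")


def check_oci_config(path="~/.oci/config", profile="DEFAULT"):
    """Return a list of problems found in an OCI config file (empty if none).

    Hypothetical helper for a quick sanity check; not part of the OCI SDK.
    """
    path = os.path.expanduser(path)
    if not os.path.isfile(path):
        return [f"config file not found: {path}"]

    # interpolation=None so '%' characters in values are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    try:
        # utf-8-sig also copes with a byte-order mark, which some Windows editors add.
        parser.read(path, encoding="utf-8-sig")
    except configparser.Error as exc:
        return [f"could not parse config: {exc}"]

    if profile != "DEFAULT" and not parser.has_section(profile):
        return [f"profile [{profile}] not found"]
    section = parser[profile]

    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if key not in section]
    key_file = section.get("key_file")
    if key_file and not os.path.isfile(os.path.expanduser(key_file)):
        problems.append(f"key_file does not exist: {key_file}")
    return problems


if __name__ == "__main__":
    issues = check_oci_config()
    print("Config looks OK" if not issues else "\n".join(issues))
```

An empty list means the expected keys are present and the key file exists. It says nothing about whether OCI will accept the key. The test script in the next section checks that.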

Set up PyCharm

Download and install Visual C++ Build tools

Modify the installation by selecting this workload

Install Python. In Windows, open a Command Prompt and type:

python

Download and install PyCharm Community Edition

Run PyCharm

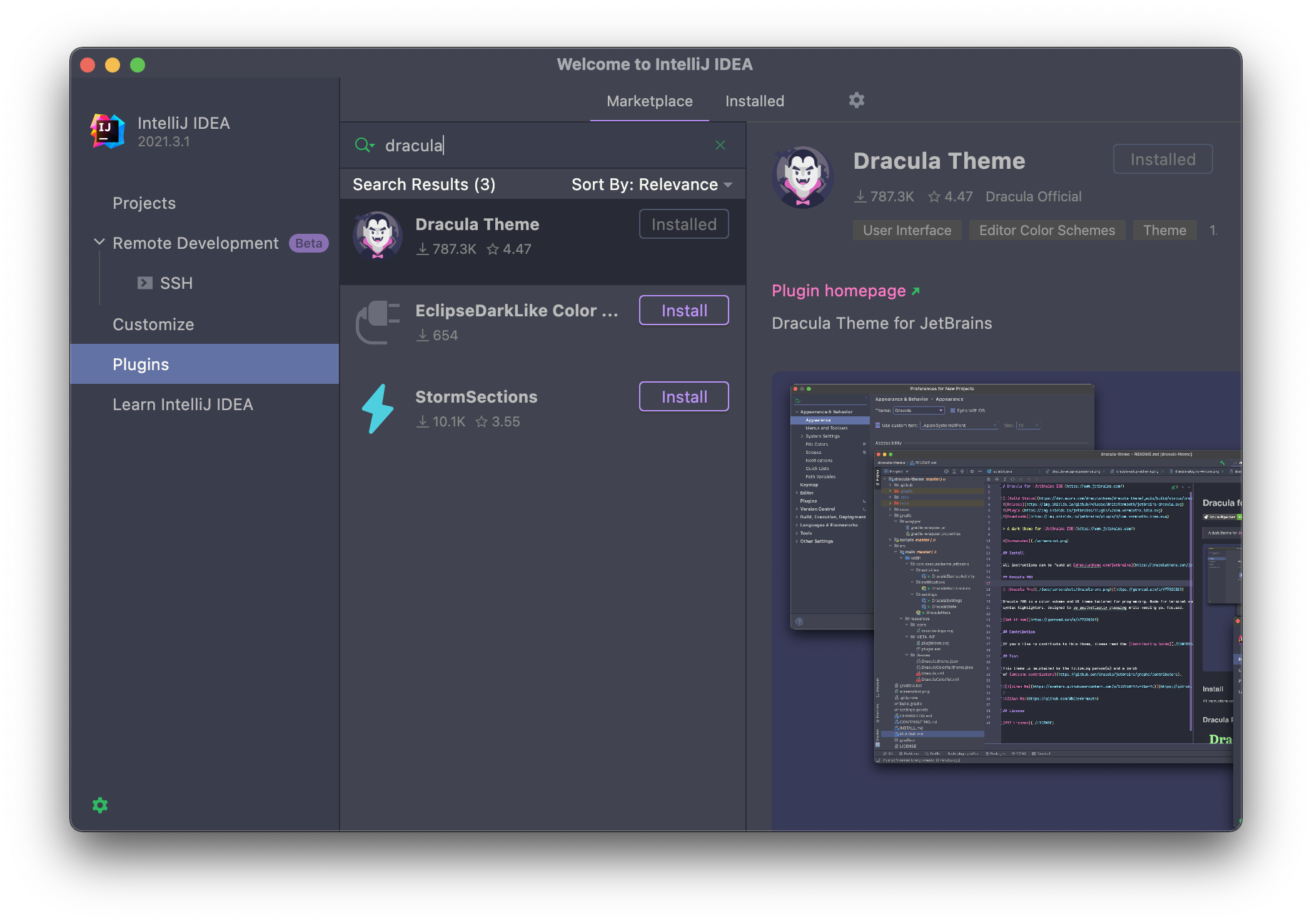

Optional/Recommended: Dracula theme. Go to the Plugin Marketplace, search for Dracula, and click Install.

Create a new project. You can tick the option to create a welcome script.

Test Python by running the main.py script with the green Run (play) button at the top of the window. It will print

Hi, PyCharm

in the output window.

Next to the Run button, the run configuration says main. Change this to Current File.

Click Terminal

Paste the following into the terminal to install the dependencies:

pip install oci
pip install oracle-ads
pip install langchain
pip install chromadb
pip install faiss-cpu
pip install streamlit
pip install python-multipart
pip install pydantic
pip install pypdf
# Matt's additions
pip install langchain_community
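Once pip finishes, you can confirm that each package is visible to the project interpreter. This is a minimal sketch using importlib.util.find_spec from the standard library. The pip-name-to-import-name mapping is my assumption (for example, oracle-ads is imported as ads and faiss-cpu as faiss). If a package shows as missing even though pip installed it, adjust the mapping first.

```python
import importlib.util

# pip package name -> top-level module it is imported as.
# Assumption: import names as of the mid-2024 releases of these packages.
PACKAGES = {
    "oci": "oci",
    "oracle-ads": "ads",
    "langchain": "langchain",
    "chromadb": "chromadb",
    "faiss-cpu": "faiss",
    "streamlit": "streamlit",
    "python-multipart": "multipart",
    "pydantic": "pydantic",
    "pypdf": "pypdf",
    "langchain_community": "langchain_community",
}


def missing_packages(packages=PACKAGES):
    """Return the pip names whose module cannot be found by this interpreter."""
    return [
        pip_name
        for pip_name, module in packages.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    print("All packages found" if not missing else "Missing: " + ", ".join(missing))
```

find_spec only locates the modules without importing them. This keeps the check quick, but it won't catch a package that is installed and then fails at import time.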

Test OCI package

We will test the OCI package since we have just installed it.

Create a file called test-oci.py in your .venv folder and paste in the following:

import oci

# Load the default configuration
config = oci.config.from_file()

# Initialize the IdentityClient with the configuration
identity = oci.identity.IdentityClient(config)

# Fetch and print user details to verify the connection
user = identity.get_user(config["user"]).data
print("User details: ", user)

Run the file (SHIFT+F10). If it works, you should see some JSON like this:

  "inactive_status": null,
  "is_mfa_activated": true,
  "last_successful_login_time": "2024-06-14T08:54:58.148000+00:00",
  "lifecycle_state": "ACTIVE",
  "name": "mmulvaney@leedsunited.com",
  "previous_successful_login_time": null,
  "time_created": "2022-11-01T14:50:28.449000+00:00"
}

Process finished with exit code 0

Configure Chatbot Code

Download the repository as a ZIP file (click this link)

Unzip the contents to your .venv folder

Right-click the project > Reload from Disk

It should look like this

Download some PDFs. I'm using this PDF (yes, I do own all these consoles).

Add them to a new pdf-docs folder, which should be a subfolder of module4

Change the URL (Chicago, Frankfurt or other) and the Compartment ID

If you don't know how to find the compartment ID, it is visible in the Playground under View Code > Python
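The endpoint URL follows the same pattern in every region. Only the region identifier changes, as in the Frankfurt endpoint used later in this blog. Here's a minimal sketch that builds the URL. The inference_endpoint helper and the short region table (us-chicago-1 for Chicago, eu-frankfurt-1 for Frankfurt) are illustrative, so check the OCI documentation for the identifier of any other region.

```python
# Pattern of the Generative AI inference endpoint, as in the Frankfurt URL used in this blog.
ENDPOINT_TEMPLATE = "https://inference.generativeai.{region}.oci.oraclecloud.com"

# Hypothetical short-name lookup; add other regions as needed.
REGION_IDS = {
    "chicago": "us-chicago-1",
    "frankfurt": "eu-frankfurt-1",
}


def inference_endpoint(region: str) -> str:
    """Build the inference endpoint from a short name or a full region identifier."""
    region = region.strip()
    region_id = REGION_IDS.get(region.lower(), region)
    return ENDPOINT_TEMPLATE.format(region=region_id)


if __name__ == "__main__":
    print(inference_endpoint("Chicago"))
    # prints https://inference.generativeai.us-chicago-1.oci.oraclecloud.com
```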

Run the file and check that a non-zero number of documents has been loaded into ChromaDB

Note: You may see a deprecation warning because, as of Chroma 0.4.x, documents are persisted automatically and the manual persist() method is deprecated. You can either remove the persist() call or ignore the warning.

Run the Chroma server:

chroma run --path .venv\ou-generativeai-pro-main\demos\module4\chromadb

Open demo-ou-chatbot-chroma-final.py in PyCharm

Change both occurrences of the URL (Chicago, Frankfurt or other) and the compartment ID

Open another terminal tab (use the + button) so that the Chroma server keeps running
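Before starting the chatbot, you can check from the new terminal that the Chroma server is actually listening. This is a minimal sketch that makes a plain TCP connection with the standard library. It assumes chroma run is using its default port, 8000, so change the port if you started the server with a different one. The server_is_listening helper is hypothetical.

```python
import socket


def server_is_listening(host="localhost", port=8000, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        # A successful connect means a server is bound to the port.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not resolvable.
        return False


if __name__ == "__main__":
    if server_is_listening():
        print("Chroma server reachable on localhost:8000")
    else:
        print("Nothing listening on localhost:8000; is chroma run still going?")
```

This only proves that something is listening on the port, not that it is Chroma. If the first terminal shows the server running, that is usually enough.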

Start the chatbot and press Enter when it asks for your email:

streamlit run .venv\ou-generativeai-pro-main\demos\module4\demo-ou-chatbot-chroma-final.py

This will start your chatbot.

Ask it:

What consoles does Matt Mulvaney own?

ENJOY!

If you see this error, text generation is not available in your region. In that case, read the next section, Streamlit Chatting.

Note: You can also run examples from the Playground. Here's an example of me asking what the Nintendo Wii is:

Streamlit Chatting

Note: Only do this if you hit the error above and want to use Streamlit with the chat service in Frankfurt or Chicago.

Note: this code sample does not use memory for conversation history or chroma for RAG. You are very welcome to provide improvements to this code and I will update this blog.

Create a file called demo-pretius-working.py in your .venv\ou-generativeai-pro-main\demos\module4 folder and paste in the following:

You need to change the following values:

Model ID: Click View Model Details and then copy the OCID

Compartment ID: It should be visible in Playground > View Code > Python

import streamlit as st
import oci

# Step 1: Initialize OCI Generative AI Inference Client
def create_chain():
    config = oci.config.from_file('~/.oci/config', 'DEFAULT')
    endpoint = "https://inference.generativeai.eu-frankfurt-1.oci.oraclecloud.com"
    generative_ai_inference_client = oci.generative_ai_inference.GenerativeAiInferenceClient(
        config=config,
        service_endpoint=endpoint,
        retry_strategy=oci.retry.NoneRetryStrategy(),
        timeout=(10, 240)
    )

    def invoke_chat(user_message):
        chat_request = oci.generative_ai_inference.models.CohereChatRequest()
        chat_request.message = user_message
        chat_request.max_tokens = 600
        chat_request.temperature = 1
        chat_request.frequency_penalty = 0
        chat_request.top_p = 0.75
        chat_request.top_k = 0

        chat_detail = oci.generative_ai_inference.models.ChatDetails()
        chat_detail.serving_mode = oci.generative_ai_inference.models.OnDemandServingMode(
            model_id="[MODEL ID]"
        )
        chat_detail.chat_request = chat_request
        chat_detail.compartment_id = "[COMPARTMENT]"

        chat_response = generative_ai_inference_client.chat(chat_detail)
        return chat_response  # Return the whole chat_response object

    return invoke_chat

# Step 2: Define Streamlit UI
if __name__ == "__main__":
    chain = create_chain()
    st.subheader("Pretius: Chatbot powered by OCI Generative AI Service")
    user_input = st.text_input("Ask me a question")

    if user_input:
        bot_response = chain(user_input)
        if bot_response.status == 200:
            # Ensure bot_response is correctly accessed based on actual structure
            chat_response = bot_response.data.chat_response  # Assuming chat_response is within data attribute
            if chat_response:
                st.write("Question: ", user_input)
                st.write("Answer: ", chat_response.text)  # Adjust based on actual structure
            else:
                st.write("Unexpected response format from OCI Generative AI Service.")
        else:
            st.write("Error communicating with OCI Generative AI Service.")
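The script only uses the model ID and compartment ID when it sends a request. A placeholder that wasn't replaced therefore only shows up as an error after you ask a question. Here's a minimal sketch of a loose format check for those two values. The helper is hypothetical and based on the general OCID layout (ocid1.<resource type>.<realm>.[region][.future use].<unique ID>). It assumes model OCIDs use the generativeaimodel resource type and that a root compartment is identified by the tenancy OCID. It can't tell you whether the IDs are valid in your tenancy.

```python
import re

# Loose pattern for ocid1.<resource type>.<realm>.[region][.future use].<unique ID>
OCID_PATTERN = re.compile(
    r"^ocid1\.([a-z0-9]+)\.([a-z0-9]+)\.([a-z0-9-]*)(?:\.[a-z0-9-]*)?\.([a-z0-9]+)$"
)


def ocid_resource_type(value):
    """Return the resource type of an OCID-like string, or None if it doesn't match."""
    match = OCID_PATTERN.match(value.strip())
    return match.group(1) if match else None


def check_ids(model_id, compartment_id):
    """Hypothetical pre-flight check for the values pasted into the chatbot script."""
    problems = []
    # Assumption: Generative AI model OCIDs use the "generativeaimodel" resource type.
    if ocid_resource_type(model_id) != "generativeaimodel":
        problems.append(f"model ID does not look like a model OCID: {model_id}")
    # A root compartment is identified by the tenancy OCID.
    if ocid_resource_type(compartment_id) not in ("compartment", "tenancy"):
        problems.append(f"compartment ID does not look like an OCID: {compartment_id}")
    return problems


if __name__ == "__main__":
    for problem in check_ids("[MODEL ID]", "[COMPARTMENT]"):
        print(problem)
```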

Start the Chatbot

streamlit run .venv\ou-generativeai-pro-main\demos\module4\demo-pretius-working.py

Enjoy your Chatbot

Deploy the Chatbot

To Deploy the Chatbot, read the OU ChatBot Setup-V1.pdf document found in the module4 folder.

ENJOY

What's the picture? Swans at Golden Acre Park, Leeds. Visit Yorkshire!