This blog was written for #JoelKallmanDay

I loved being around Joel, and I know he liked a nice Beer 🍺. So how about I take the immense power of Oracle Cloud and the amazing latest features of Oracle APEX, and ... um... scrape the web for the best Beer deals? - why not!?

Discount Beer? yes, I'm sick of missing the best deals and them selling out quickly. To counter this... I'm using an Oracle Cloud Compute with the Scrapy Python engine to run a spider to crawl a brewery website to retrieve all their products in JSON format. If a new product appears or a sudden price reduction of an existing product takes it below the magical 0.5 pence per ml, then APEX will send me a Push Notification on my Phone... oh and I'll create a tidy APEX application to browse my beers as well.

Whats Scrapy?

I researched for the best web scraping tool around... and the resounding answer was https://scrapy.org/ which you can build and run your web scraping spiders.

I took a quick tutorial and now my entire web scraping knowledge comes from this 23 minute video which I highly recommend you watch.

You'll see my scrapy code for the brewery website I'm using. I won't explain how it works because that video explains it way better than I could in this short blog.

Install Scrapy For Oracle Unix or WSL

I installed it on both as I could Develop scrapy in VSCode in WSL on my Windows box, and then deploy in "production" on my mirco (always FREE) Oracle Unix Compute box.

Find your processor by using

uname -mMine is

x86_64so I will run the code below to download Miniconda. This is a tool that will install Scrapy for uscurl -O https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh chmod +x Miniconda3-latest-Linux-x86_64.sh ./Miniconda3-latest-Linux-x86_64.shPress ENTER

Exit Reading with CTRL+C

Type yes to accept licence terms

Press ENTER to confirm the location

Type yes to perform a conda init

Type exit to exit Terminal

Start another Terminal Session

Type the following to confirm the conda version

conda --versionType the following to install Scrapy

conda install -c conda-forge scrapyType y to proceed

Make sure nano is installed too

sudo install nano -y

Brewery Project

Start a scrapy project

scrapy startproject ThornbridgeThis has created a set of folders

Create a spider

nano Thornbridge/Thornbridge/spiders/thornbridge_spider.pyPaste in the following. If you want to know how I wrote this scrapy code, you have to watch the video I posted at the top of this blog.

import scrapy

class ThornbridgeSpider(scrapy.Spider):

name = "thornbridge"

start_urls = ["https://thornbridgebrewery.co.uk/collections/beercases",

"https://thornbridgebrewery.co.uk/collections/flash-sales",

"https://thornbridgebrewery.co.uk/collections/mixed-cases"]

def parse(self, response):

products = response.css("li.grid__item")

for product in products:

product_name = product.css("a.full-unstyled-link::text").get()

price = product.css("span.price-item.price-item--regular::text").get()

price_regular = product.css("div.price--on-sale div.price__sale s.price-item.price-item--regular::text").get()

price_sale = product.css("span.price-item.price-item--sale::text").get()

sold_out = product.css("div.price.price--sold-out::text").get()

image = product.css("div.card__media div.media img").attrib['src']

link = product.css("a.full-unstyled-link").attrib['href']

# sold_out = False

if sold_out:

sold_out = True

else:

sold_out = False

price = price.replace("£", "").replace(" GBP", "")

if price_regular is None:

price_regular = price

else:

price_regular = price_regular.replace("£", "").replace(" GBP", "")

yield {

"Name": product_name.strip(),

"Image": "https:" + image,

"Link": "https://thornbridgebrewery.co.uk" + link,

"Price": price.strip(),

"Regular": price_regular.strip(),

"Sold_Out": sold_out

}

# Follow pagination links if available (assuming they use the "Next" button)

next_page = response.css("a.pagination__item--prev::attr(href)").get()

if next_page:

yield response.follow(next_page, self.parse)

CTRL+X to save and exit nano

Create a Schema

Create a beer_feed table to store the incoming feeds

CREATE TABLE "BEER_FEED" ( "ID" NUMBER GENERATED BY DEFAULT ON NULL AS IDENTITY MINVALUE 1 MAXVALUE 9999999999999999999999999999 INCREMENT BY 1 START WITH 1 CACHE 20 NOORDER NOCYCLE NOKEEP NOSCALE NOT NULL ENABLE, "FEED_DATE" DATE DEFAULT ON NULL SYSDATE NOT NULL ENABLE, "FEED_JSON" CLOB, "STATUS" VARCHAR2(60 CHAR) DEFAULT ON NULL 'NEW' NOT NULL ENABLE, CHECK (feed_json is json) ENABLE, CONSTRAINT "BEER_FEED_ID_PK" PRIMARY KEY ("ID") USING INDEX ENABLE )Create a beer table to hold the beer, but linked to the feed.

-- create tables create table beer ( id number generated by default on null as identity constraint beer_id_pk primary key, beer_feed_id number constraint beer_beer_feed_id_fk references beer_feed on delete cascade, name varchar2(256 char), quantity number, volume number, vessel varchar2(32 char), image varchar2(4000 char), link varchar2(4000 char), price number, regular number, sold_out varchar2(5 char), price_per_ml number ) ; -- table index create index beer_i1 on beer (beer_feed_id);Create a trigger to calculate the price per ml

create or replace trigger "BEER_T" before insert on "BEER" REFERENCING NEW AS NEW OLD AS OLD for each row begin :NEW.price_per_ml := ROUND( :new.PRICE / (:new.QUANTITY * :new.VOLUME ), 5 ); end; /Create a Procedure to process the feed & send notifications if the price per millimetre is favourable for reduced or new products.

YOU MUST CHANGE THE APP_ID CONSTANT AT THE TOP OF THE FILE

create or replace PROCEDURE P_PROCESS_BEER_FEED(p_feed_id beer_feed.id%TYPE)

AS

l_app_id_c CONSTANT apex_applications.application_id%TYPE DEFAULT 109;

l_target_price_per_ml CONSTANT NUMBER DEFAULT 0.005;

BEGIN

-- Insert new records from JSON into the beer table

INSERT INTO beer (beer_feed_id, name, quantity, volume, vessel, image, link, price, regular, sold_out)

SELECT

p_feed_id,

jt.Name AS Name,

CASE

WHEN REGEXP_SUBSTR(jt.Name, '(\d+)x') IS NOT NULL THEN REPLACE( REGEXP_SUBSTR(jt.Name, '(\d+)x'), 'x' )

WHEN REGEXP_SUBSTR(jt.Name, '(\d+)\s*x') IS NOT NULL THEN REGEXP_SUBSTR(jt.Name, '(\d+)\s')

WHEN REGEXP_SUBSTR(jt.Name, '(\d+)\s+Beers') IS NOT NULL THEN REGEXP_SUBSTR(jt.Name, '(\d+)\s')

ELSE REGEXP_SUBSTR(jt.Name, '(\d+)\s*ml')

END AS quantity,

REPLACE(

CASE

WHEN REGEXP_SUBSTR(name, '(\d+)\s*ml') IS NOT NULL THEN REGEXP_SUBSTR(name, '(\d+)\s*ml')

ELSE NULL

END, 'ml', '') AS volume,

CASE

WHEN REGEXP_SUBSTR(name, 'cans|bottles|Bottles|Cans') IS NOT NULL THEN LOWER(REGEXP_SUBSTR(name, 'cans|bottles|Bottles|Cans'))

ELSE NULL

END AS vessel,

jt.Image AS Image,

jt.Link AS Link,

TO_NUMBER(jt.Price) AS Price,

TO_NUMBER(jt.Regular) AS Regular,

jt.Sold_Out AS Sold_Out

FROM

beer_feed,

JSON_TABLE(feed_json, '$[*]'

COLUMNS (

Name VARCHAR2(255) PATH '$.Name',

Image VARCHAR2(255) PATH '$.Image',

Link VARCHAR2(255) PATH '$.Link',

Price VARCHAR2(20) PATH '$.Price',

Regular VARCHAR2(20) PATH '$.Regular',

Sold_Out VARCHAR2(5) PATH '$.Sold_Out'

)

) jt

WHERE beer_feed.id = p_feed_id;

-- Delete duplicate records based on the link

DELETE FROM beer

WHERE ROWID NOT IN (

SELECT MAX(q.ROWID)

FROM beer q

WHERE q.beer_feed_id = p_feed_id

GROUP BY link

HAVING COUNT(*) > 1

);

-- Start sending out notifications

FOR x IN (

WITH

latest AS (SELECT b.* FROM beer b, beer_feed f WHERE b.beer_feed_id = f.id AND status = 'LATEST'),

previous AS (SELECT b.* FROM beer b, beer_feed f WHERE b.beer_feed_id = f.id AND status = 'PREVIOUS'),

interesting AS (

SELECT l.*

FROM latest l, previous p

WHERE l.link = p.link

AND l.price < p.price

AND l.sold_out = 'false'

UNION

SELECT l.*

FROM latest l

WHERE l.link NOT IN (SELECT p.link FROM previous p)

AND EXISTS ( SELECT p.link FROM previous p )

)

SELECT *

FROM interesting

WHERE PRICE_PER_ML <= l_target_price_per_ml

)

LOOP

for l_subscription in (

select distinct user_name

from apex_appl_push_subscriptions

where application_id = l_app_id_c )

loop

IF V('SESSION') IS NULL

THEN

apex_session.create_session (

p_app_id => l_app_id_c,

p_page_id => 1,

p_username => 'EXAMPLE USER' );

END IF;

apex_pwa.send_push_notification (

p_application_id => l_app_id_c,

p_user_name => l_subscription.user_name,

p_title => 'Thornbridge Discount',

p_body => x.name,

p_target_url => x.link);

end loop;

END LOOP;

END P_PROCESS_BEER_FEED;

/

REST Endpoint

In APEX, go to RESTful Services

Create a Module called scrapy with a Base Path of /scrapy/

Create a Resource Template called feed

Create a Handler of type POST with a PL/SQL of

DECLARE l_feed_id beer_feed.id%TYPE DEFAULT NULL; BEGIN UPDATE beer_feed SET status = 'HISTORIC' WHERE status = 'PREVIOUS'; UPDATE beer_feed SET status = 'PREVIOUS' WHERE status = 'LATEST'; INSERT INTO beer_feed( feed_json, status) VALUES (:BODY, 'LATEST') RETURNING id INTO l_feed_id; p_process_beer_feed(l_feed_id); END;Create a Privilege called scrapy and protect the scrapy module like this

Click Create Privilege

Create a Client

BEGIN OAUTH.create_client( p_name => 'scrapy_client', p_grant_type => 'client_credentials', p_owner => 'Myself', p_description => 'A client for Scrapy', p_support_email => 'me@example.com', p_privilege_names => 'scrapy' ); COMMIT; END; /Fetch the Client ID and Client Secret and save in Notepad

SELECT client_id, client_secret FROM user_ords_clients WHERE name = 'scrapy_client'

Create a shell script to push to the End Point

create a file

nano /home/opc/scrape_and_post.shPaste in the contents below. Make sure you change the CLIENT_ID, CLIENT_SECRET and the URL (this appears twice).

#!/bin/bash # Enter project folder cd /home/opc/Thornbridge # Scrape to beer.json scrapy crawl thornbridge -O beer.json # Define your client ID and client secret CLIENT_ID="uEw.." CLIENT_SECRET="pRw.." # Get the access_token and store it in a variable ACCESS_TOKEN=$(curl -s -k --user "$CLIENT_ID:$CLIENT_SECRET" --data "grant_type=client_credentials" https://g4-lufatp.adb.eu-frankfurt-1.oraclecloudapps.com/ords/tb/oauth/token | grep -o '"access_token":"[^"]*' | cut -d'"' -f4) # Use the token in the second curl command curl \ --location 'https://g4-lufatp.adb.eu-frankfurt-1.oraclecloudapps.com/ords/tb/scrapy/feed' \ --header 'Content-Type: application/json' \ --header "Authorization: Bearer $ACCESS_TOKEN" \ --data @beer.jsonCTRL+X to save the file and exit nano

Make it executable

chmod +x scrape_and_post.shRun it once like this. Or run it as often as you wish with the below command.

./scrape_and_post.shSchedule it

export EDITOR=nano; crontab -ePaste this on the first line to run the scrape daily

0 0 * * * /bin/bash /home/opc/scrape_and_post.shCTRL+X to save the file and exit nano

Check to see if it is scheduled

crontab -l

APEX Application

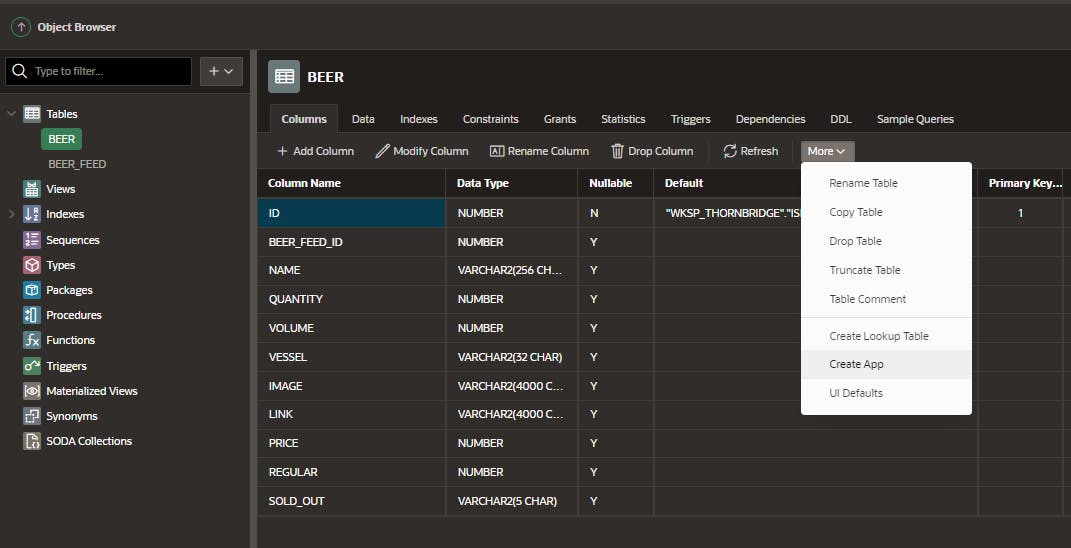

In Object Browser, create an Application from the beer table

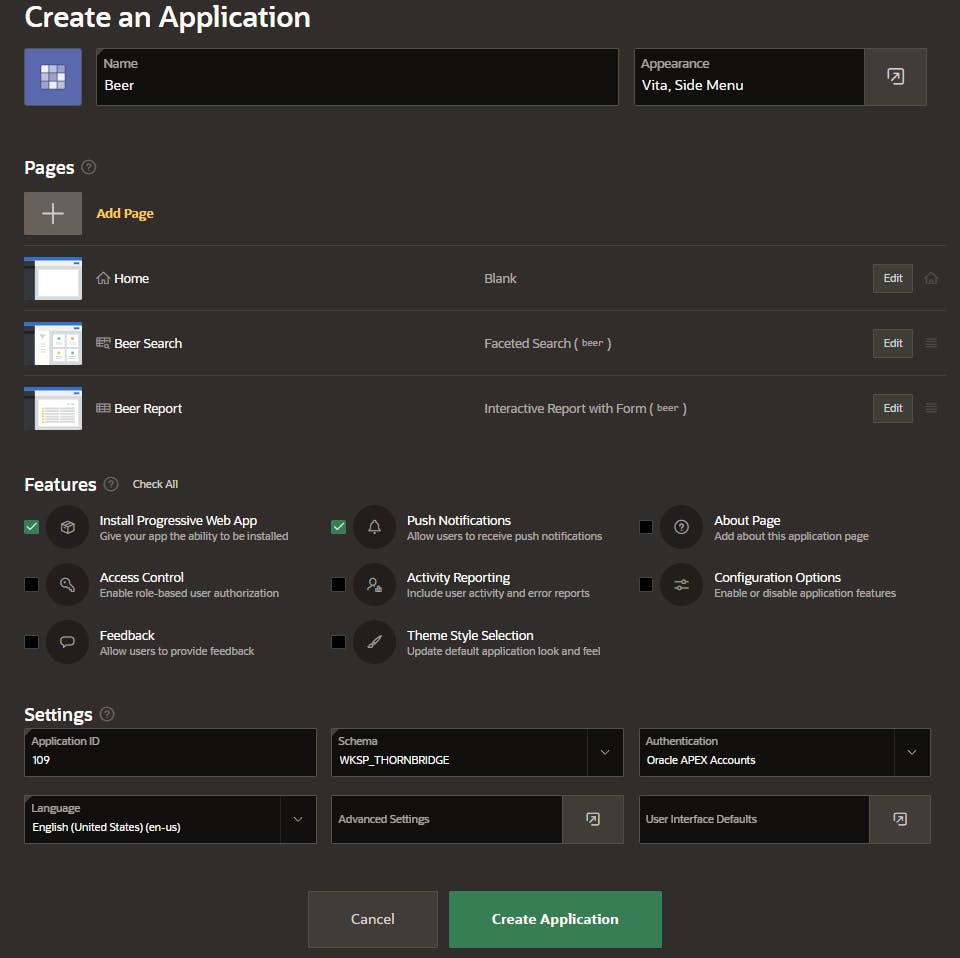

Select Install Progressive Web App and Push Notifications and click Create Application

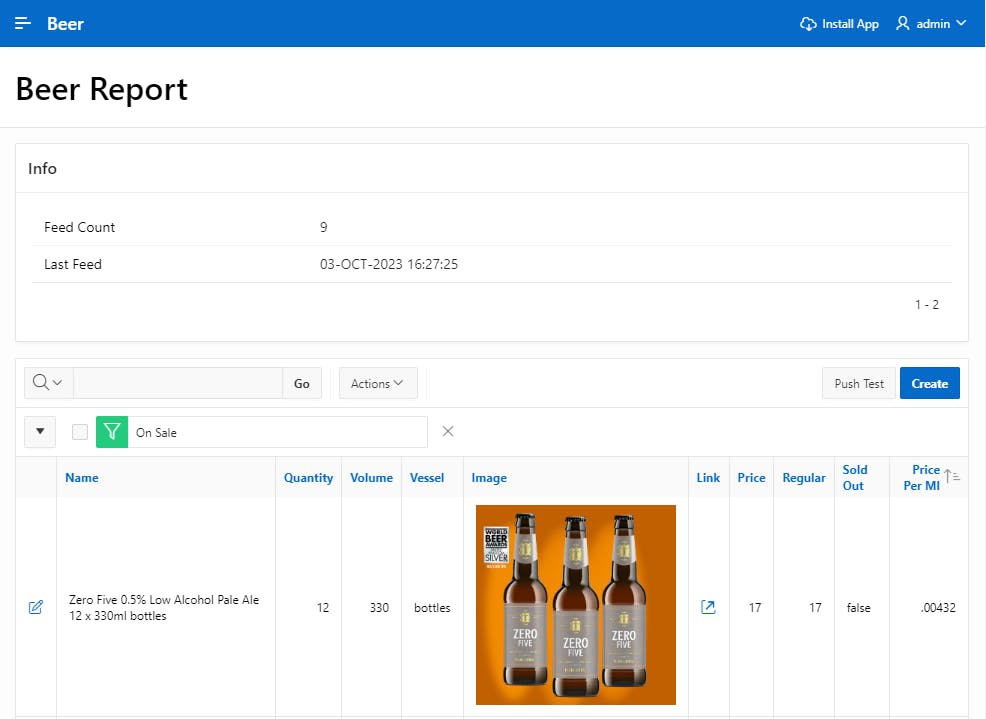

Run the application. I've modified mine a little, however, you should see an Interactive Report

Click Your Username > Settings and turn Push Notifications on

(Optional) Install the app on your device and also turn Push Notifications on

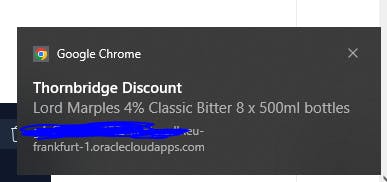

Wait for beer discounts to appear on your phone!

Whats the picture? Its Crimple Valley Viaduct. Visit Yorkshire!